Synthetic media refers to algorithmically generated or modified content that simulates authentic human production. Powered by generative AI models, such content now encompasses deepfake videos, cloned audio, synthetic news, and entirely artificial social personas. These systems erode the boundary between authentic information and computational fabrication.

The U.S. economy faces a twofold exposure: reputational destabilization and transactional exploitation. Financial markets increasingly rely on real-time data feeds and sentiment analytics. Synthetic media can distort those inputs, leading to manipulated valuations, disinformation-driven volatility, and loss of investor confidence. At the micro level, fraud networks use AI-generated personas and voice models to impersonate executives or clients, enabling sophisticated business email compromise and financial theft.

Synthetic media undermines economic trust, the intangible infrastructure of capitalism. Once falsified information can move markets, counterfeit credibility becomes an economic weapon. This analysis therefore treats synthetic media not as an isolated cybersecurity issue but as a cross-domain economic threat, intersecting information integrity, trade, and governance.

1. Synthetic media is being used for financial and reputational manipulation. Fabricated news, cloned voices, and false CEO statements cause market fluctuations and investor confusion. These incidents demonstrate synthetic media’s ability to distort economic signals in real time, affecting valuations and public confidence.

2. The current U.S. response is fragmented and reactive. Regulatory and institutional measures lag behind the speed of synthetic media innovation. Agencies such as the FTC and SEC have limited technical capacity to detect algorithmic falsification, creating an environment where synthetic manipulation can outpace enforcement.

EdgeTheory source reliability tracker

Reliability ratings show that Western economic narratives are under pressure from the amplification of recession narratives and increased tariffs. Source fidelity scores vary. Voice of America’s September 11 report on U.S. recession signals scores 7 and highlights underlying economic strains. Bloomberg’s September 15 article on U.S. outperformance scores 5, but it downplays the amplified narrative of tariff-related economic problems. Global Research’s September 24 analysis misrepresents shifts in the international order, using anti-Western narratives that seek to bolster Russia and China against Western fragility. Overall, fidelity ratings are not being amplified on a large scale. High-fidelity sources anchor understanding amid polarized narratives and geopolitical rifts. Meanwhile, low-fidelity sources are underperforming in the U.S. economic infosphere.

X post highlighting deepfake of Spencer Hakimian

A deepfake video of Spencer Hakimian, founder of Tolou Capital Management, circulated on X on June 2, 2025, promoting a fraudulent Solana token. The AI-generated clip convincingly mimicked Hakimian and presented him endorsing decentralized finance to target crypto investors. The post drew 68.3k views, 1.3k likes, 235 reposts, and 183 replies. Hakimian’s June 3 post warned followers to ignore such pitches and highlighted the imminent threat of synthetic media: he foresees scenarios where forged videos of prominent financiers could drive hype and extract billions. The incident underscores the growing volume of deepfakes used to undermine trust in high-velocity financial markets, where scammers can go viral overnight. With regulatory frameworks lagging, verification and skepticism are critical defenses. The post also amplified discussion of AI watermarking and platform responsibility, which points to exposure and rapid detection as defensive measures against synthetic media and deepfakes.

Deepfake Facebook post of Anthony Bolton

A fraudulent Facebook post featuring a deepfake of Anthony Bolton predicts “everyone will hold these three stocks over 20% by end of 2025.” The deepfake video touts gains in AI stocks across 30 handpicked equities. The post uses synthetic scripting, a sense of urgent news, and false proof to drive retail FOMO. These strategies orchestrate pump-and-dump cycles that cost U.S. investors $450 million in Q3 2025, inflating micro-cap AI names by 50% before engineered crashes that cut 401(k) balances by 12% on average. The post exemplifies the increased circulation of synthetic financial material meant to manipulate perception, distort capital flows, and impose systemic economic costs.

Synthetic video of David Kostin promoting Nvidia

A deepfake of David Kostin, Chief US Equity Strategist at Goldman Sachs, appeared on Instagram in March 2025, falsely claiming Nvidia held a 35 percent market share to drive fraudulent investments. The clip showed how synthetic media now infiltrates financial communication and can move markets through manufactured authority. By imitating Kostin, the deepfake manipulates perception and injects false confidence into retail stocks, which erodes market integrity and transparency. The economic impact of deepfake manipulation has skyrocketed, putting pressure on fraud prevention systems and compliance budgets across economic sectors. AI-generated scams are projected to extract $40 billion from U.S. institutions. These synthetic voices shape trading behavior, misdirect capital flows, and distort valuation cues, all of which weaken investor trust.

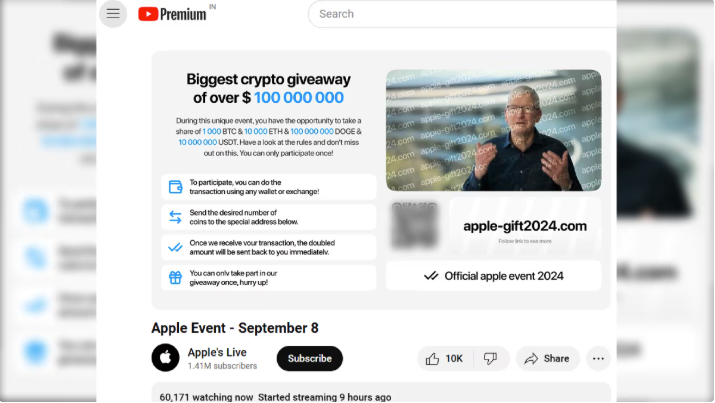

Falsified YouTube synthetic media livestream featuring Tim Cook

A fraudulent YouTube livestream posed as Apple’s September 8, 2024, Tim Cook event, promoting a “biggest crypto giveaway” that claimed to double participants’ investments up to $100,000. The livestream instructed victims to send Bitcoin or USDT to a designated wallet for further investment use. The video used deepfake techniques to replicate the visual and audio style of an official Apple broadcast, including logo overlays, presenter mimicry, and event-style graphics, creating a veneer of legitimacy designed to manipulate viewer perception. This synthetic announcement is a microcosm of increased amplification that converts trust into irreversible crypto transfers. Cryptocurrency-related fraud now inflicts measurable economic damage: the FBI reported $5.6 billion lost in 2023 alone, a 45 percent increase from 2022. Beyond direct financial losses for individuals, these schemes erode consumer confidence in digital platforms, reduce engagement with legitimate financial innovations, strain household budgets, and inflate regulatory and victim-support costs.

AI deepfake of BlackRock Investor Jean Boivin

A BlackRock video featuring Jean Boivin, Head of BlackRock Investment Institute, uses AI-generated or heavily synthetic visuals and audio to simulate authoritative market commentary. The content mimics real broadcasts and creates a perception of credibility designed to influence investors’ behavior at scale. These synthetic signals drive measurable financial outcomes. Quarterly inflows into BlackRock-managed ETFs exceed $500 billion, inflating S&P 500 valuations, lowering corporate borrowing costs, and boosting private infrastructure investment. The engineered messaging concentrates wealth among top households and increases systemic fragility. In downturns, coordinated withdrawals triggered by these artificial cues could contract credit, exacerbate deficits, and amplify market volatility. The video exemplifies how synthetic financial media functions as a direct amplifier of capital flows, investor sentiment, and U.S. economic dynamics.

EdgeTheory’s image trace and narrative classifiers are optimal tools to identify misleading synthetic content. The image trace takes an image and compares it across all sources within EdgeTheory’s real-time full-corpus registry. After comparing the image to thousands of articles, the image trace returns every current source in which the image is embedded. Lastly, EdgeTheory’s narrative classifiers identify amplifications that increase incitement and track factual fidelity and source reliability. This allows sources to be compared by their current impact, engagement, and fidelity. The U.S. economic deepfakes in this paper were identified via these tools.
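The paper does not describe how the image trace is implemented. As a rough illustration of the general idea of matching one image against a registry of sources, the sketch below uses a generic average-hash (perceptual hash) comparison. This is a swapped-in, well-known technique, not EdgeTheory’s method. The function names, the source names, the toy pixel grids, and the `max_distance` threshold are all assumptions made for the example.

```python
from typing import Dict, List

Gray = List[List[int]]  # rows of 0-255 grayscale pixel values


def average_hash(pixels: Gray, size: int = 8) -> int:
    """Block-average the image to size x size cells, then set one bit per cell above the mean."""
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for r in range(size):
        r0, r1 = r * height // size, (r + 1) * height // size
        for c in range(size):
            c0, c1 = c * width // size, (c + 1) * width // size
            block = [pixels[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def trace_image(query: Gray, registry: Dict[str, Gray], max_distance: int = 5) -> List[str]:
    """Return the (hypothetical) source names whose image hash is close to the query hash."""
    target = average_hash(query)
    return sorted(
        source
        for source, image in registry.items()
        if hamming(target, average_hash(image)) <= max_distance
    )


# Toy data: a horizontal gradient, a slightly brightened copy, and an unrelated vertical gradient.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brightened = [[value + 10 for value in row] for row in original]
unrelated = [[y * 16 for _ in range(16)] for y in range(16)]

registry = {"site_a/repost": brightened, "site_b/other": unrelated}
print(trace_image(original, registry))  # prints ['site_a/repost']
```

The brightened copy hashes identically to the original because every cell shifts by the same amount relative to the mean, which is why this family of hashes tolerates simple re-encoding or brightness changes. A production system would need far more robust matching than this toy threshold.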

Synthetic media represents a systemic economic threat, not a peripheral cybersecurity issue. Evidence from deepfakes targeting executives, strategists, and tech leaders demonstrates that fabricated content can manipulate market perceptions, drive fraudulent transactions, and distort capital flows. Cases involving Spencer Hakimian, Anthony Bolton, David Kostin, Tim Cook, and Jean Boivin show that synthetic signals can move billions in real time, eroding investor confidence and creating vulnerabilities that traditional regulatory and institutional frameworks cannot quickly counter. The U.S. economy’s reliance on instantaneous data and automated decision-making amplifies exposure, making detection, verification, and proactive mitigation critical for market stability.

Current responses are fragmented and reactive, leaving structural gaps in oversight and economic resilience. Enforcement agencies such as the SEC and FTC lack technical capacity to track or verify AI-generated content at scale, while industry adoption of synthetic media outpaces regulatory guidance. Detection tools, content classification systems, and public awareness campaigns are emerging defenses, but systemic adoption remains incomplete. EdgeTheory’s ability to track narratives, trace images, and monitor factual fidelity creates an apparatus built to support economic warfare strategy. Moving forward, economic security will increasingly depend on integrating AI forensics into financial infrastructure, enhancing cross-domain information integrity, and recognizing synthetic media as an active amplifier of both market opportunity and risk.

Lead Analyst: Connor Marr is an analyst at the EdgeTheory Lab. He is studying Strategic Intelligence in National Security at Patrick Henry College. He has participated in and led special projects for the college on varied topics, including Cartels, Border Security, Anti-Human Trafficking, Debate of Cyber Strategy, and Chinese Infrastructural Projects in Africa.